Understanding AI Safety: A Critical Imperative for Business Leaders

Artificial intelligence (AI) is becoming central to business strategy. CEOs and marketing managers must therefore treat the safety and alignment of AI systems as core considerations, mitigating potential risks while maximizing benefits.

Navigating the Risks of AI Misalignment

The development of AI technology is fraught with challenges, not least of which is the alignment problem: ensuring that AI systems operate in accordance with human values and intentions. Misalignment can lead to unintended behaviors that pose significant risks. For instance, large language models (LLMs) like ChatGPT can exhibit biases or overconfidence, which can undermine their usefulness and raise ethical concerns in business settings.

As experts, including contributors to the AI Alignment Forum, have argued, businesses need to prioritize AI safety strategies that go beyond technological enhancements to include governance, ethical frameworks, and robust oversight mechanisms.

The Importance of a Safety-First Culture

Establishing a culture centered on safety and ethics within an organization is vital. This includes fostering open communication about potential risks and ensuring that systems are designed with safety as a key tenet. A robust safety culture helps organizations identify and mitigate risks associated with AI technologies effectively.

As the AI Innovation and Ethics blog emphasizes, embedding principles of safety from the outset allows companies to benefit from AI advancements while maintaining accountability and integrity in their practices.

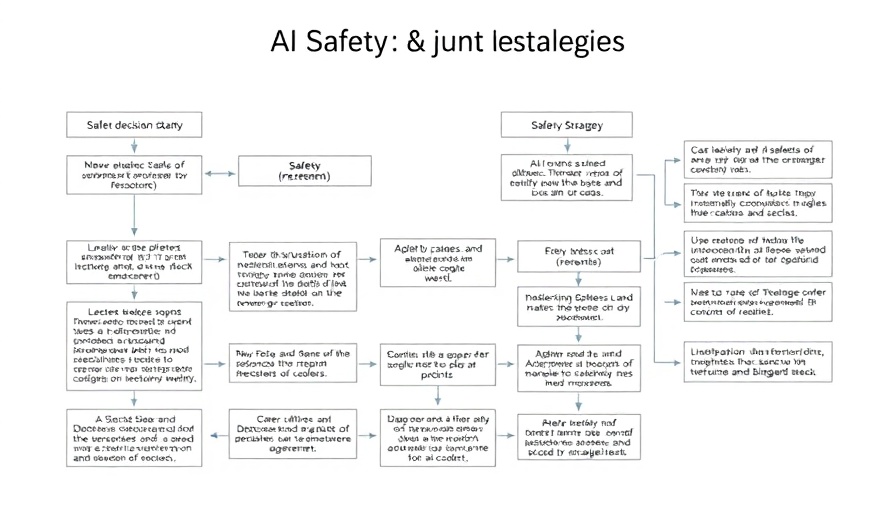

Strategies for AI Alignment

How can businesses engage effectively with AI safety? Here are key strategies:

- Scalable Oversight: Systems must be established to continually monitor AI behavior against ethical standards. This ensures that potential misalignments are identified early.

- Robustness: AI systems should be resilient to manipulation and behave consistently across different environments. Developing robust models helps avoid unintended consequences.

- Transparency: Increasing interpretability in AI decisions enhances trust and allows stakeholders to understand model outputs, thereby improving alignment with human values.

This multi-faceted approach underscores the necessity of merging technical and ethical considerations in AI deployment.
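
For teams that want to see what oversight and robustness checks might look like in practice, here is a minimal sketch. Everything in it is an assumption made for illustration: the `Check` class, the `review_output` and `consistent` helpers, and the two example rules are hypothetical, and a real deployment would rely on criteria reviewed by legal, compliance, and domain experts rather than simple keyword matches.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Check:
    """A hypothetical policy rule applied to a single model output."""
    name: str
    violates: Callable[[str], bool]  # returns True when the output breaks the rule


def review_output(output: str, checks: List[Check]) -> List[str]:
    """Oversight step: return the names of every check the output violates."""
    return [check.name for check in checks if check.violates(output)]


def consistent(answers: List[str]) -> bool:
    """Crude robustness probe: do answers to rephrased prompts agree
    once whitespace and letter case are normalized?"""
    normalized = {answer.strip().lower() for answer in answers}
    return len(normalized) <= 1


# Illustrative rules only (assumptions, not an established standard).
CHECKS = [
    Check("overconfident_claim", lambda text: "guaranteed" in text.lower()),
    Check("missing_disclosure", lambda text: "ai-generated" not in text.lower()),
]

if __name__ == "__main__":
    draft = "Results are guaranteed. (AI-generated draft)"
    print(review_output(draft, CHECKS))   # names of violated checks
    print(consistent(["Yes", " yes "]))   # answers to two phrasings of one question
```

Flagged outputs would typically be routed to a human reviewer rather than blocked automatically, which keeps accountability with people while the checks handle volume.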

The Path Forward: Collaboration and Governance

Given the systemic risks introduced by AI, including biases and operational challenges, a coordinated effort across industries, academia, and regulatory bodies is essential. As with nuclear governance historically, the rapid progress in AI calls for a re-evaluation of our ethical frameworks and operational protocols.

By engaging in cooperative dialogue and working toward a global consensus on AI ethics, organizations can navigate the complexities of AI development more effectively. Implementing policies that manage AI risks not only protects the enterprise but also strengthens public trust in these powerful technologies.

Conclusion: A Call to Action

Leaders in tech-driven industries have a unique opportunity to shape the future of AI safely. Embracing best practices in AI safety will not only minimize potential threats but will also lead to enhanced societal trust in AI applications. As you consider the integration of AI within your organization, make ethics and alignment a priority to ensure the success of your strategies today and tomorrow.
